Ceph Cluster Deployment: Automated Installation with ceph-deploy

1. Ceph Installation Methods

1.1 Manual installation

Official guide: https://docs.ceph.com/en/pacific/install/index_manual/

1.2 cephadm installation, with both CLI and GUI support (the direction going forward)

Official guide: https://docs.ceph.com/en/pacific/cephadm/#

cephadm is new in Ceph release v15.2.0 (Octopus) and does not support older versions of Ceph. It is the recommended installation method for the Octopus release.

1.3 Automated installation with ceph-deploy

Third-party reference manual: https://www.kancloud.cn/willseecloud/ceph/1788301

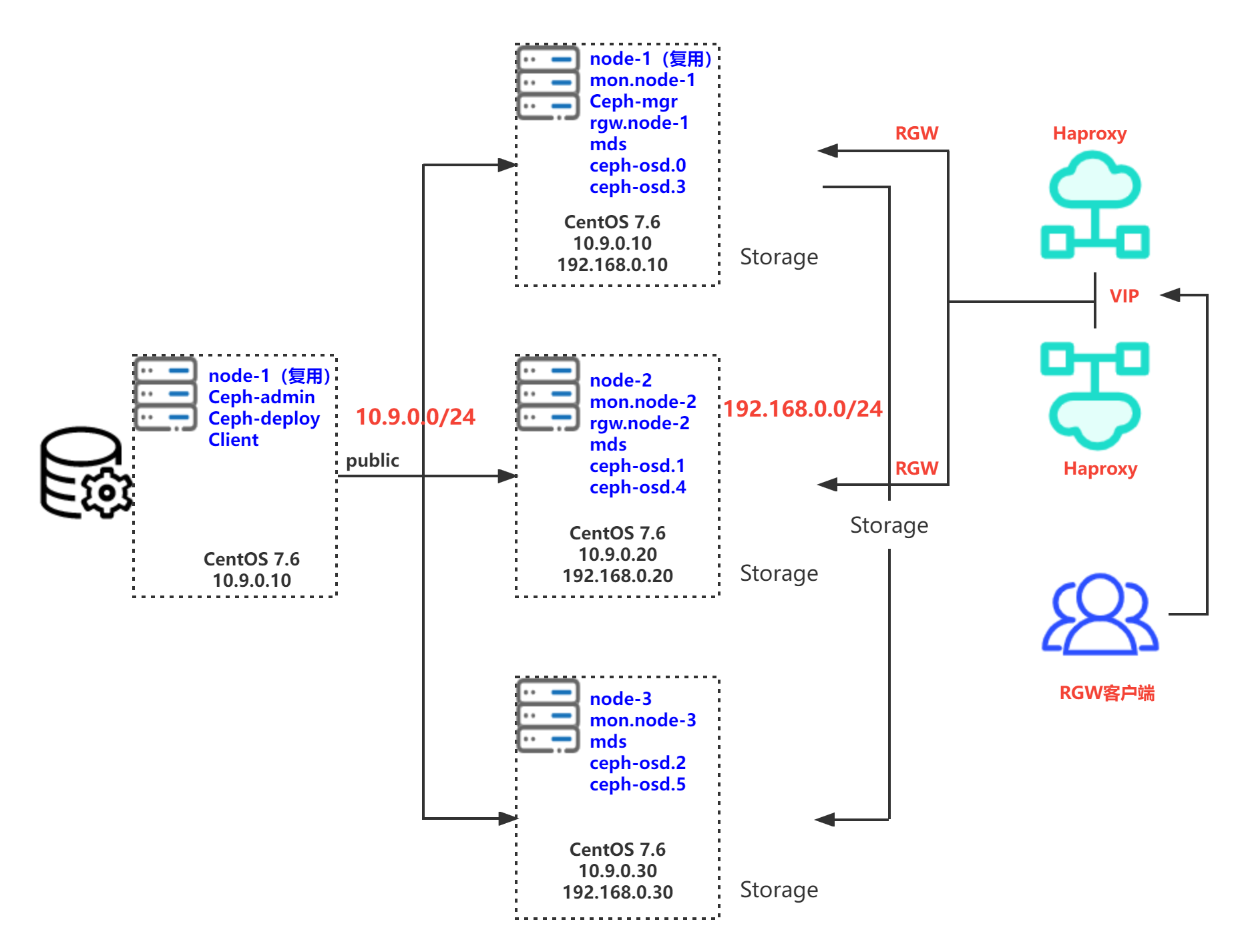

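2. Deployment Topology

Topology notes: to save resources, this Ceph cluster was deployed on three UCloud cloud hosts with UCloud elastic NICs (two elastic NICs per host, one on the 10.9.0.0/24 business network and one on the 192.168.0.0/24 storage network). Each host has two UCloud cloud data disks attached, used for storage block allocation. A VIP address can be requested through the UCloud platform, which is very convenient.

Node roles:

- ceph-deploy: the Ceph cluster deployment node, responsible for deploying the cluster as a whole. A node inside the Ceph cluster can also double as the deployment node.

- monitor: the Ceph monitor node, which carries the cluster's critical management tasks. Usually 3 or 5 nodes are needed.

- mgr: the Ceph cluster management node (manager), which provides a unified entry point for external access.

- rgw: the Ceph object gateway, a service that lets clients access the Ceph cluster through standard object storage APIs.

- mds: the Ceph metadata server (MetaData Server), which mainly stores the metadata of the file system service. It is only needed when file storage is used.

- osd: the Ceph storage node (Object Storage Daemon), the node that actually stores the data.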

3. Base Environment Preparation

3.1 Configure hosts resolution

    [root@node-X ~]# cat /etc/hosts
    10.9.0.10 node-1
    10.9.0.20 node-2
    10.9.0.30 node-3
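One way to apply these entries on every node without creating duplicates on a rerun is a small helper like the sketch below. The function name `add_host_entry` and its optional file argument are illustrative assumptions, not part of the original procedure; the IPs and hostnames are the ones listed above.

```shell
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already
# appears as a hostname on a non-comment line.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }          # exit 0 only when NAME was found
    ' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

On each node this could be called as `add_host_entry 10.9.0.10 node-1`, `add_host_entry 10.9.0.20 node-2`, and `add_host_entry 10.9.0.30 node-3`.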

3.2 Set up passwordless SSH from the management node node-1 to node-2 and node-3

    [root@node-1 ~]# ssh-keygen
    [root@node-1 ~]# ssh-copy-id node-2
    [root@node-1 ~]# ssh-copy-id node-3

3.3 Disable SELinux and firewalld

    # Confirm that SELinux is disabled
    [root@node-X ~]# getenforce
    Disabled
    # Stop and disable firewalld
    [root@node-X ~]# systemctl stop firewalld.service
    [root@node-X ~]# systemctl disable firewalld.service
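The transcript above only confirms that SELinux is already disabled. If `getenforce` reports something else, a minimal sketch for persisting the change could look like the following. The function name `set_selinux_disabled` is an assumption for illustration; the default path /etc/selinux/config is the standard SELinux configuration file on CentOS 7.

```shell
# set_selinux_disabled [FILE]
# Rewrites the SELINUX= line in FILE (default /etc/selinux/config) to
# SELINUX=disabled and leaves every other line, such as SELINUXTYPE=, as is.
set_selinux_disabled() {
    local file="${1:-/etc/selinux/config}" tmp
    tmp="$(mktemp)" || return 1
    # Write through a temp file and copy back so the original file keeps
    # its ownership and permissions.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Running `setenforce 0` switches the running system to permissive mode immediately, while the config change made by `set_selinux_disabled` takes effect at the next reboot, after which `getenforce` reports Disabled.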

4. NTP Time Synchronization

4.1 Configure node-1 as the NTP server

    # Install the NTP package
    [root@node-X ~]# yum install ntp -y
    # Configure the NTP server
    [root@node-1 ~]# vim /etc/ntp.conf
    server 10.42.255.1 iburst
    server 10.42.255.2 iburst
    [root@node-1 ~]# systemctl restart ntpd.service
    [root@node-1 ~]# systemctl enable ntpd.service
    # Note: these are the internal NTP servers provided by UCloud
    # Check the time offset
    [root@node-1 ~]# ntpq -pn
         remote           refid      st t when poll reach   delay   offset  jitter
    ==============================================================================
    +10.42.255.1     83.168.200.199   3 u   12   64    1    0.881   -0.057   0.064
    *10.42.255.2     182.92.12.11     3 u   10   64    1    0.912  -12.724   0.040

4.2 Point node-2 and node-3 at node-1 as their NTP server

    # Install the NTP package
    [root@node-X ~]# yum install ntp -y
    [root@node-X ~]# vim /etc/ntp.conf
    server 10.9.0.10 iburst
    [root@node-X ~]# systemctl restart ntpd.service
    [root@node-X ~]# systemctl enable ntpd.service
    [root@node-X ~]# ntpq -pn
         remote           refid      st t when poll reach   delay   offset  jitter
    ==============================================================================
     10.9.0.10       10.42.255.2      4 u    1   64    0    0.000    0.000   0.000
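Ceph monitors are sensitive to clock differences between nodes, so it can be useful to check the offsets reported by `ntpq -pn` in a script rather than by eye. The sketch below reads that output on stdin and prints the largest absolute offset in milliseconds. The function name `ntp_max_abs_offset` is hypothetical, and the parsing assumes the ten-column layout shown above.

```shell
# ntp_max_abs_offset
# Reads `ntpq -pn` output on stdin, skips the two header lines, and prints
# the largest absolute value found in the offset column (field 9), in ms.
ntp_max_abs_offset() {
    awk '
        NR > 2 && NF >= 10 {
            off = $9 + 0
            if (off < 0) off = -off
            if (off > max) max = off
        }
        END { printf "%.3f\n", max + 0 }
    '
}
```

A typical call would be `ntpq -pn | ntp_max_abs_offset`, run on each node once ntpd has had time to synchronize.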

5. Configure the Ceph yum Repository

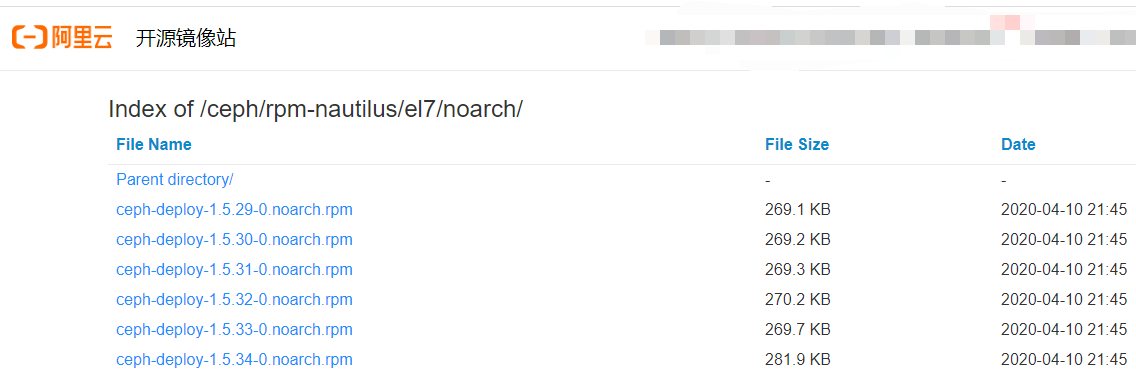

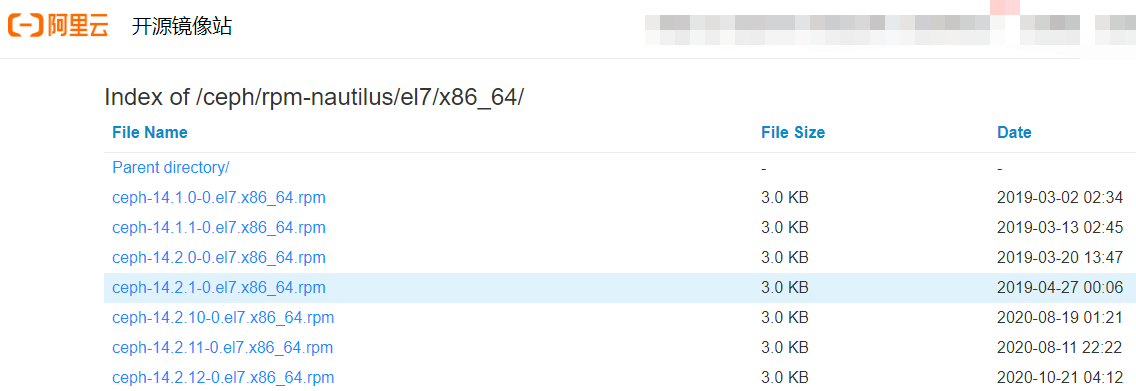

- Note 1: the EPEL and Ceph repositories used here are the Alibaba Cloud mirrors. https://developer.aliyun.com/mirror/

- Note 2: the ceph-deploy tool is available at https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/

    [root@node-1 ~]# vim /etc/yum.repos.d/ceph.repo
    [noarch]
    name=noarch
    baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
    enabled=1
    gpgcheck=0
    [x86_64]
    name=x86_64
    baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
    enabled=1
    gpgcheck=0
    [root@node-1 ~]# scp /etc/yum.repos.d/ceph.repo root@node-2:/etc/yum.repos.d/ceph.repo
    [root@node-1 ~]# scp /etc/yum.repos.d/ceph.repo root@node-3:/etc/yum.repos.d/ceph.repo
    [root@node-X ~]# yum list

6. Install ceph-deploy

6.1 Install python-setuptools and ceph-deploy on node-1

    [root@node-1 ~]# yum install python-setuptools ceph-deploy -y

6.2 ceph-deploy version requirement

    [root@node-1 ~]# ceph-deploy -h
    usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]
                       [--overwrite-conf] [--ceph-conf CEPH_CONF]
                       COMMAND ...

    Easy Ceph deployment

        -^-
       /   \
       |O o|  ceph-deploy v2.0.1
       ).-.(
      '/|||\`
      | '|` |
        '|`

    Full documentation can be found at: http://ceph.com/ceph-deploy/docs
    # Note: be sure to install ceph-deploy 2.0; otherwise installing the M release of Ceph will run into problems

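7. Deploy the Monitor Node

7.1 Create the cluster networks and designate the monitor node

    [root@node-1 ~]# mkdir ceph-deploy
    [root@node-1 ~]# cd ceph-deploy/
    [root@node-1 ceph-deploy]# ceph-deploy new --public-network 10.9.0.0/16 --cluster-network 192.168.0.0/24 node-1
    # --public-network: the network through which the cluster is accessed
    # --cluster-network: the cluster's internal data synchronization network
    # node-1: the monitor node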
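The monitor's address is expected to sit inside the network passed to `--public-network`, so a quick sanity check before running `ceph-deploy new` can catch a mistyped prefix. The sketch below is IPv4 only, and the helper names `ip_to_int` and `ip_in_cidr` are illustrative assumptions, not part of Ceph or ceph-deploy.

```shell
# ip_to_int A.B.C.D
# Converts a dotted IPv4 address to its 32-bit integer value.
ip_to_int() {
    local IFS=.
    set -- $1    # split the address into four octets on "."
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP NETWORK/PREFIX
# Succeeds when IP belongs to NETWORK/PREFIX.
ip_in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}" mask
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For the values used in this walkthrough, `ip_in_cidr 10.9.0.10 10.9.0.0/16` succeeds, which matches the `mon_host` and `public_network` values ceph-deploy writes to ceph.conf.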

7.2 Inspect the generated files

    # Running the command in 7.1 generates the following files
    [root@node-1 ceph-deploy]# ll
    total 12
    -rw-r--r-- 1 root root  255 Apr 14 15:26 ceph.conf
    -rw-r--r-- 1 root root 3015 Apr 14 15:26 ceph-deploy-ceph.log
    -rw------- 1 root root   73 Apr 14 15:26 ceph.mon.keyring
    # Ceph configuration
    [root@node-1 ceph-deploy]# cat ceph.conf
    [global]
    fsid = 9b2c4474-bf4c-4023-992f-e1beceede003
    public_network = 10.9.0.0/16
    cluster_network = 192.168.0.0/24
    mon_initial_members = node-1
    mon_host = 10.9.0.10
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    # Authentication information
    [root@node-1 ceph-deploy]# cat ceph.mon.keyring
    [mon.]
    key = AQCIzFdiAAAAABAA7j7vrgUcUbGoCkaLY/7iZg==
    caps mon = allow *

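7.3 Install the Ceph base packages

- The automatic installation done by ceph-deploy rewrites the custom yum repository to point at overseas servers, which slows the installation down, so the packages are installed manually here instead.

    # X means the packages must be installed on all three nodes
    [root@node-X ~]# yum install ceph ceph-mon ceph-mgr ceph-radosgw ceph-mds -y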
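Since node-1 already has passwordless SSH to the other nodes (section 3.2), the same install command can be pushed out from node-1 instead of being typed on each host. This is a sketch under that assumption; the function name `install_ceph_packages` and the `SSH_CMD` override (handy for a dry run) are hypothetical.

```shell
# install_ceph_packages NODE...
# Runs the yum install from this section on every NODE over SSH and stops
# at the first failure. Set SSH_CMD=echo to print the commands instead.
install_ceph_packages() {
    local runner="${SSH_CMD:-ssh}" node
    for node in "$@"; do
        "$runner" "$node" yum install -y ceph ceph-mon ceph-mgr ceph-radosgw ceph-mds || return 1
    done
}
```

With the setup above, node-1 would run the yum command locally and then call `install_ceph_packages node-2 node-3`.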

- Initialize the monitor

    [root@node-1 ceph-deploy]# ceph-deploy mon create-initial
    # Inspect the keyring files generated by the initialization
    [root@node-1 ceph-deploy]# ls -l
    total 44
    -rw------- 1 root root 113 Apr 14 15:38 ceph.bootstrap-mds.keyring
    -rw------- 1 root root 113 Apr 14 15:38 ceph.bootstrap-mgr.keyring
    -rw------- 1 root root 113 Apr 14 15:38 ceph.bootstrap-osd.keyring
    -rw------- 1 root root 113 Apr 14 15:38 ceph.bootstrap-rgw.keyring
    -rw------- 1 root root 151 Apr 14 15:38 ceph.client.admin.keyring   # admin keyring
    # Copy the admin keyring and configuration file to every node
    [root@node-1 ceph-deploy]# ceph-deploy admin node-1 node-2 node-3

7.4 Check the cluster after initialization

    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_WARN
                mon is allowing insecure global_id reclaim
      services:
        mon: 1 daemons, quorum node-1 (age 4m)
        mgr: no daemons active
        osd: 0 osds: 0 up, 0 in
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   0 B used, 0 B / 0 B avail
        pgs:

7.5 Install the manager daemon (mgr) node

    [root@node-1 ceph-deploy]# ceph-deploy mgr create node-1
    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_WARN
                mon is allowing insecure global_id reclaim
      services:
        mon: 1 daemons, quorum node-1 (age 16m)
        mgr: node-1(active, since 4s)   # one mgr node added successfully
        osd: 0 osds: 0 up, 0 in
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   0 B used, 0 B / 0 B avail
        pgs:

8. Deploy the OSD Nodes

8.1 Add disks as OSDs

    # vdb and vdc are the two disks dedicated to OSDs
    [root@node-1 ceph-deploy]# lsblk
    NAME   MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
    vda    253:0    0  20G  0 disk
    └─vda1 253:1    0  20G  0 part /
    vdb    253:16   0  20G  0 disk
    vdc    253:32   0  20G  0 disk
    # (1) Add the vdb disk on node-1 as an OSD
    [root@node-1 ceph-deploy]# ceph-deploy osd create node-1 --data /dev/vdb
    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_WARN   # warns that the OSD count is below 3
                OSD count 1 < osd_pool_default_size 3
                mon is allowing insecure global_id reclaim
      services:
        mon: 1 daemons, quorum node-1 (age 79m)
        mgr: node-1(active, since 62m)
        osd: 1 osds: 1 up (since 79s), 1 in (since 79s)   # one OSD added successfully
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   1.0 GiB used, 19 GiB / 20 GiB avail
        pgs:
    # (2) Add the vdb disks on node-2 and node-3 as OSDs
    [root@node-1 ceph-deploy]# ceph-deploy osd create node-2 --data /dev/vdb
    [root@node-1 ceph-deploy]# ceph-deploy osd create node-3 --data /dev/vdb
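With more nodes or more disks per node, typing one `ceph-deploy osd create` per disk gets repetitive. The sketch below only prints the commands for each node and device pair so they can be reviewed before being run from the ceph-deploy working directory. The function name `osd_create_commands` is an assumption; the command syntax is the one used in this section.

```shell
# osd_create_commands "NODE..." "DEVICE..."
# Prints one "ceph-deploy osd create" command per node/device pair.
# Nothing is executed; pipe the output to sh once it looks right.
osd_create_commands() {
    local nodes="$1" devices="$2" node dev
    for node in $nodes; do
        for dev in $devices; do
            printf 'ceph-deploy osd create %s --data %s\n' "$node" "$dev"
        done
    done
}
```

For example, `osd_create_commands "node-2 node-3" "/dev/vdb"` prints the two commands shown in step (2). Passing `"/dev/vdb /dev/vdc"` would also cover the second data disk, which this walkthrough leaves unused.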

8.2 Disable insecure mode (insecure global_id reclaim)

    # Check the Ceph cluster status again
    [root@node-1 ceph-deploy]# ceph osd tree
    ID CLASS WEIGHT  TYPE NAME       STATUS REWEIGHT PRI-AFF
    -1       0.05846 root default
    -3       0.01949     host node-1
     0   hdd 0.01949         osd.0       up  1.00000 1.00000
    -5       0.01949     host node-2
     1   hdd 0.01949         osd.1       up  1.00000 1.00000
    -7       0.01949     host node-3
     2   hdd 0.01949         osd.2       up  1.00000 1.00000
    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_WARN   # note: one warning remains
                mon is allowing insecure global_id reclaim
      services:
        mon: 1 daemons, quorum node-1 (age 86m)
        mgr: node-1(active, since 69m)
        osd: 3 osds: 3 up (since 64s), 3 in (since 64s)
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   3.0 GiB used, 57 GiB / 60 GiB avail
        pgs:
    # After insecure mode is disabled, the cluster returns to a healthy state
    [root@node-1 ceph-deploy]# ceph config set mon auth_allow_insecure_global_id_reclaim false
    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_OK
      services:
        mon: 1 daemons, quorum node-1 (age 88m)           # 1 monitor
        mgr: node-1(active, since 71m)                    # 1 manager daemon
        osd: 3 osds: 3 up (since 2m), 3 in (since 2m)     # 3 OSDs
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   3.0 GiB used, 57 GiB / 60 GiB avail
        pgs:
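When these steps are scripted, it can help to poll the cluster until it reports HEALTH_OK rather than rerunning `ceph -s` by hand. The sketch below relies on the `ceph health` command, whose output starts with the health status. The function name `wait_for_health_ok` and the `CEPH_CMD` override (useful for testing without a cluster) are hypothetical.

```shell
# wait_for_health_ok [ATTEMPTS] [DELAY_SECONDS]
# Polls `ceph health` until its output starts with HEALTH_OK.
# Returns 0 on success, 1 if the cluster is still unhealthy after
# ATTEMPTS checks (default 30) spaced DELAY_SECONDS apart (default 10).
wait_for_health_ok() {
    local attempts="${1:-30}" delay="${2:-10}" ceph_cmd="${CEPH_CMD:-ceph}"
    local i=1 status=""
    while [ "$i" -le "$attempts" ]; do
        status="$("$ceph_cmd" health 2>/dev/null)"
        case "$status" in
            HEALTH_OK*) return 0 ;;
        esac
        sleep "$delay"
        i=$((i + 1))
    done
    echo "cluster not healthy after $attempts checks: $status" >&2
    return 1
}
```

A call such as `wait_for_health_ok 12 5` would check for up to about a minute after the `ceph config set` command above.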

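9. Scale Out mon and mgr

9.1 Add monitors

- The monitor is critical to a Ceph cluster: as the control center of the whole cluster it holds the cluster's state information, so monitor high availability must be ensured. An odd number of monitors is usually deployed.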
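The preference for an odd count follows from the monitors needing a strict majority to form a quorum. The arithmetic can be sketched as below; the function name `mon_quorum_info` is made up for this illustration.

```shell
# mon_quorum_info N
# For N monitors, prints the size of a strict majority and how many
# monitors can fail while a majority still remains.
mon_quorum_info() {
    local n="$1" majority tolerated
    majority=$(( n / 2 + 1 ))
    tolerated=$(( n - majority ))
    printf 'monitors=%d majority=%d tolerated_failures=%d\n' "$n" "$majority" "$tolerated"
}
```

Comparing `mon_quorum_info 3` with `mon_quorum_info 4` shows that both tolerate a single failure, so the fourth monitor adds cost without adding resilience, whereas going to 5 raises the tolerated failures to 2.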

    [root@node-1 ceph-deploy]# ceph-deploy mon add node-2 --address 10.9.0.20
    [root@node-1 ceph-deploy]# ceph-deploy mon add node-3 --address 10.9.0.30
    [root@node-1 ceph-deploy]# ceph quorum_status --format json-pretty

    {
        "election_epoch": 12,
        "quorum": [
            0,
            1,
            2
        ],
        "quorum_names": [
            "node-1",
            "node-2",
            "node-3"
        ],
        "quorum_leader_name": "node-1",   # monitor leader
        "quorum_age": 195,
        "monmap": {
            "epoch": 3,
            "fsid": "9b2c4474-bf4c-4023-992f-e1beceede003",
            "modified": "2022-04-14 17:34:08.183735",
            "created": "2022-04-14 15:38:19.378891",
            "min_mon_release": 14,
            "min_mon_release_name": "nautilus",
            "features": {
                "persistent": [
                    "kraken",
                    "luminous",
                    "mimic",
                    "osdmap-prune",
                    "nautilus"
                ],
                "optional": []
            },
            "mons": [   # monitor address information
                {
                    "rank": 0,
                    "name": "node-1",
                    "public_addrs": {
                        "addrvec": [
                            {
                                "type": "v2",
                                "addr": "10.9.0.10:3300",
                                "nonce": 0
                            },
                            {
                                "type": "v1",
                                "addr": "10.9.0.10:6789",
                                "nonce": 0
                            }
                        ]
                    },
                    "addr": "10.9.0.10:6789/0",
                    "public_addr": "10.9.0.10:6789/0"
                },
                {
                    "rank": 1,
                    "name": "node-2",
                    "public_addrs": {
                        "addrvec": [
                            {
                                "type": "v2",
                                "addr": "10.9.0.20:3300",
                                "nonce": 0
                            },
                            {
                                "type": "v1",
                                "addr": "10.9.0.20:6789",
                                "nonce": 0
                            }
                        ]
                    },
                    "addr": "10.9.0.20:6789/0",
                    "public_addr": "10.9.0.20:6789/0"
                },
                {
                    "rank": 2,
                    "name": "node-3",
                    "public_addrs": {
                        "addrvec": [
                            {
                                "type": "v2",
                                "addr": "10.9.0.30:3300",
                                "nonce": 0
                            },
                            {
                                "type": "v1",
                                "addr": "10.9.0.30:6789",
                                "nonce": 0
                            }
                        ]
                    },
                    "addr": "10.9.0.30:6789/0",
                    "public_addr": "10.9.0.30:6789/0"
                }
            ]
        }
    }
    [root@node-1 ceph-deploy]# ceph -s

      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_OK
      services:
        mon: 3 daemons, quorum node-1,node-2,node-3 (age 7m)   # scaled out to 3 monitor nodes successfully
        mgr: node-1(active, since 106m)
        osd: 3 osds: 3 up (since 37m), 3 in (since 37m)
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   3.0 GiB used, 57 GiB / 60 GiB avail
        pgs:

    # Check monitor status, method 1
    [root@node-1 ceph-deploy]# ceph mon stat
    e3: 3 mons at {node-1=[v2:10.9.0.10:3300/0,v1:10.9.0.10:6789/0],node-2=[v2:10.9.0.20:3300/0,v1:10.9.0.20:6789/0],node-3=[v2:10.9.0.30:3300/0,v1:10.9.0.30:6789/0]}, election epoch 12, leader 0 node-1, quorum 0,1,2 node-1,node-2,node-3
    # Check monitor status, method 2
    [root@node-1 ceph-deploy]# ceph mon dump
    epoch 3
    fsid 9b2c4474-bf4c-4023-992f-e1beceede003
    last_changed 2022-04-14 17:34:08.183735
    created 2022-04-14 15:38:19.378891
    min_mon_release 14 (nautilus)
    0: [v2:10.9.0.10:3300/0,v1:10.9.0.10:6789/0] mon.node-1
    1: [v2:10.9.0.20:3300/0,v1:10.9.0.20:6789/0] mon.node-2
    2: [v2:10.9.0.30:3300/0,v1:10.9.0.30:6789/0] mon.node-3
    dumped monmap epoch 3

9.2 Add mgr daemons

- mgr likewise normally runs as one active instance with several standbys.

    [root@node-1 ceph-deploy]# ceph-deploy mgr create node-2 node-3
    [root@node-1 ceph-deploy]# ceph -s
      cluster:
        id:     9b2c4474-bf4c-4023-992f-e1beceede003
        health: HEALTH_OK
      services:
        mon: 3 daemons, quorum node-1,node-2,node-3 (age 19m)
        mgr: node-1(active, since 118m), standbys: node-2, node-3   # 1 active and 2 standbys: all 3 mgr nodes added successfully
        osd: 3 osds: 3 up (since 49m), 3 in (since 49m)
      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   3.0 GiB used, 57 GiB / 60 GiB avail
        pgs:

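Author: UStarGao

Link: https://www.starcto.com/storage_scheme/302.html

Source: STARCTO

Copyright belongs to the author. For commercial reprints, contact the author for authorization; for non-commercial reprints, credit the source.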
